FrontLLM Introduction

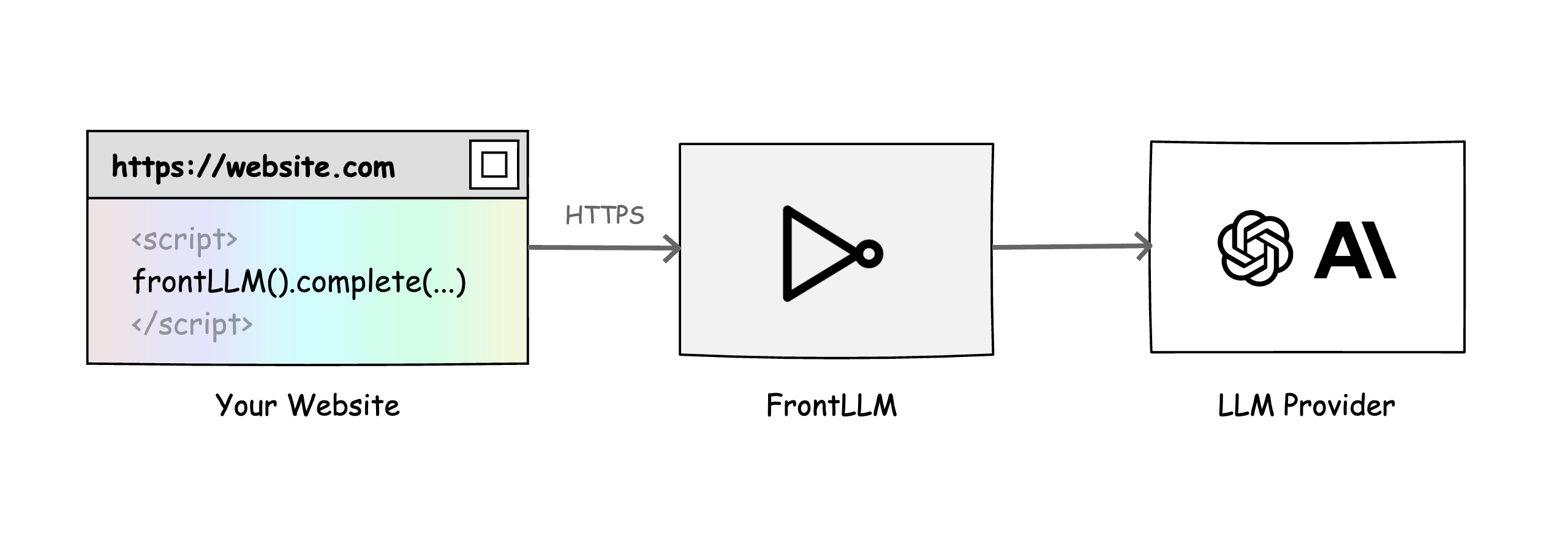

FrontLLM is your public gateway to LLMs. It lets you call LLMs directly from your front-end code, with no backend needed. FrontLLM supports rate limiting, usage tracking, and more, and it works with any front-end framework, including React, Vue, and Angular.

🚀 Installation

NPM

To use FrontLLM in your project, you can install it via npm:

npm install frontllm

Now you can import the library and create an instance of the gateway with your specific gateway ID:

import { frontLLM } from 'frontllm';

const gateway = frontLLM('<gateway_id>');

CDN

To use FrontLLM via CDN, you can include the following script tag in your HTML file:

<script src="https://cdn.jsdelivr.net/npm/frontllm@0.1.1/dist/index.umd.js"></script>

This will expose the frontLLM function globally, which you can use to create an instance of the gateway:

<script>
  const gateway = frontLLM('<gateway_id>');
  // ...
</script>

🎬 Usage

Chat Completion:

// Short syntax: requires a default model configured in the gateway
const shortResponse = await gateway.complete('Hello world!');

// Full syntax
const response = await gateway.complete({
  model: 'gpt-4',
  messages: [{ role: 'user', content: 'Hello world!' }],
  temperature: 0.7
});

// Output the generated response text to the console.
console.log(response.choices[0].message.content);
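If a completion can come back without any choices (for example, an empty or filtered result), a small guard avoids a TypeError when reading the text. This is a minimal sketch that assumes only the response shape used above (choices[0].message.content); getCompletionText is a hypothetical helper, not part of the frontllm package.

```javascript
// Hypothetical helper (not part of frontllm): reads the generated text from a
// response shaped like the one above, returning '' when no text is present.
function getCompletionText(response) {
  const choice = response?.choices?.[0];
  return choice?.message?.content ?? '';
}

// Usage with a mock object shaped like the response above:
const mockResponse = {
  choices: [{ message: { role: 'assistant', content: 'Hello! How can I help?' } }]
};
console.log(getCompletionText(mockResponse)); // prints "Hello! How can I help?"
console.log(getCompletionText({ choices: [] })); // prints an empty line
```

With a real gateway you would pass the value returned by gateway.complete(...) instead of the mock.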

Chat Completion with Streaming:

// Short syntax: requires a default model configured in the gateway
const shortStream = await gateway.completeStreaming('Where is Europe?');

// Full syntax
const response = await gateway.completeStreaming({
  model: 'gpt-4',
  messages: [{ role: 'user', content: 'Where is Europe?' }],
  temperature: 0.7
});

// Read chunks until the stream finishes, printing each piece of generated text.
for (;;) {
  const { finished, chunks } = await response.read();
  for (const chunk of chunks) {
    console.log(chunk.choices[0].delta.content);
  }
  if (finished) {
    break;
  }
}
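Often you want the complete text as well as the incremental output. The sketch below relies only on the read() contract used in the loop above (each call resolves to { finished, chunks }, with text in chunk.choices[0].delta.content) and concatenates the deltas. collectStreamText and createMockStream are hypothetical helpers for illustration; they are not part of the frontllm package.

```javascript
// Hypothetical helper (not part of frontllm): drains a streaming response using
// the read() contract shown above and resolves to the concatenated text.
// onDelta, if given, is called with each non-empty piece of text as it arrives.
async function collectStreamText(stream, onDelta = () => {}) {
  let text = '';
  for (;;) {
    const { finished, chunks } = await stream.read();
    for (const chunk of chunks) {
      // Some chunks (for example, the last one) may carry no content.
      const delta = chunk.choices?.[0]?.delta?.content;
      if (delta) {
        text += delta;
        onDelta(delta);
      }
    }
    if (finished) {
      return text;
    }
  }
}

// Hypothetical mock that mimics the read() contract, for local testing only.
// Each inner array is the list of text pieces returned by one read() call.
function createMockStream(batches) {
  let i = 0;
  return {
    async read() {
      const chunks = (batches[i] ?? []).map((content) => ({
        choices: [{ delta: { content } }]
      }));
      i += 1;
      return { finished: i >= batches.length, chunks };
    }
  };
}

collectStreamText(createMockStream([['Europe ', 'is '], ['a continent.']]))
  .then((text) => console.log(text)); // prints "Europe is a continent."
```

With a real gateway, something like `const text = await collectStreamText(response, (d) => console.log(d));` would print each piece as it arrives and still give you the full answer at the end. Skipping empty deltas keeps "undefined" out of the output.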